This blog post gives an overview of how to run Large Language Models (LLMs) locally using Ollama.

Ollama lets you run a range of LLMs on your own computer. This lets developers quickly build a prototype around an LLM use case without relying on paid, hosted models.

Another advantage is that your data stays on your local machine.

Perform the following steps to set up and run an LLM locally:

- Go to the Ollama website (ollama.com) and download the latest version for your operating system.

- Run the installer to set up Ollama locally.

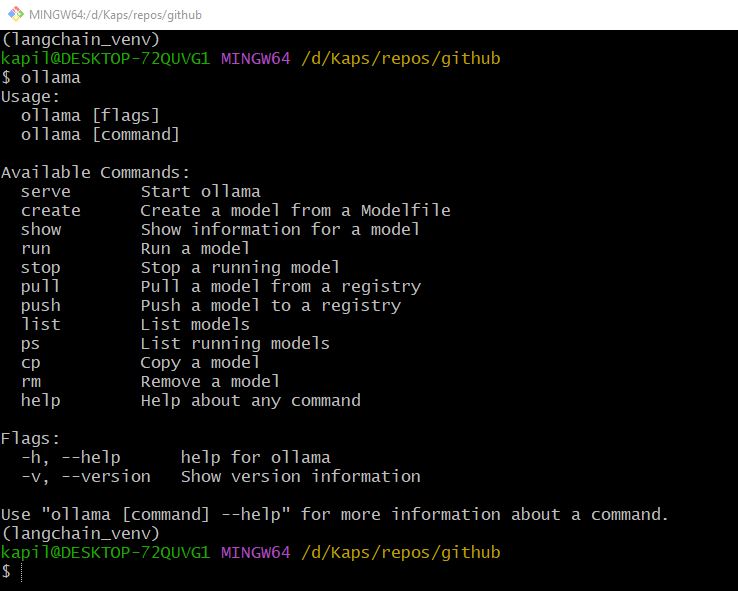

- Once the setup is complete, Ollama should be installed on your local machine. You can verify this by running the following command from your bash shell / command prompt:

```bash
ollama
```

- If you see output like that shown in the screenshot below, Ollama has been installed successfully on your local machine.

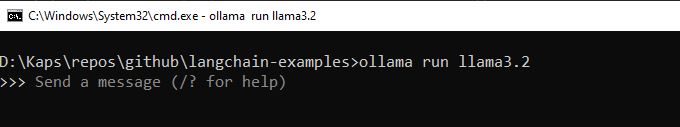
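If you want to script this installation check (for example, in a setup script), here is a minimal Python sketch using only the standard library. It assumes the `ollama` executable is on your `PATH` and accepts the `--version` flag; the function name `ollama_version` is hypothetical, chosen for illustration.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Return the text printed by `ollama --version`, or None if the CLI is not on PATH."""
    exe = shutil.which("ollama")  # full path to the ollama executable, or None
    if exe is None:
        return None
    # Run the CLI and capture its output as text.
    result = subprocess.run([exe, "--version"], capture_output=True, text=True, timeout=10)
    # Combine both streams so no part of the message is lost.
    return (result.stdout + result.stderr).strip()


if __name__ == "__main__":
    version = ollama_version()
    print(version if version is not None else "Ollama CLI not found on PATH")
```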

- The next step is to download an LLM to use with Ollama. Let's say we want to run Meta's Llama 3.2 model (`llama3.2`) locally. Execute the following command to download and run it:

```bash
ollama run llama3.2
```

The output should look like the screenshot below:

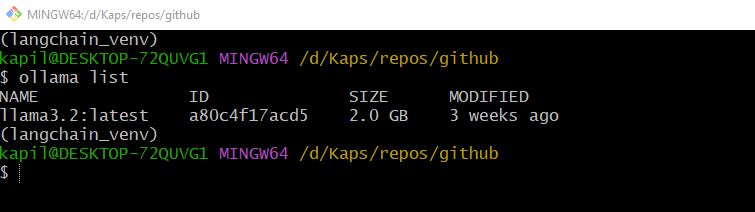
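Besides the interactive session in the terminal, Ollama also serves a local HTTP API, by default at `http://localhost:11434`. The following is a minimal Python sketch, assuming the Ollama server is running on that default address and `llama3.2` has already been downloaded, that sends one prompt to the `/api/generate` endpoint using only the standard library. The helper names (`build_generate_request`, `parse_generate_response`, `generate`) are hypothetical, introduced for illustration.

```python
import json
import urllib.request

# Assumption: the Ollama server is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for /api/generate; stream=False asks for a single JSON reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_generate_response(body: bytes) -> str:
    """Extract the generated text from a non-streaming /api/generate response."""
    return json.loads(body)["response"]


def generate(model: str, prompt: str) -> str:
    """Send a prompt to a locally running model and return its full reply."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=300) as resp:
        return parse_generate_response(resp.read())


if __name__ == "__main__":
    try:
        print(generate("llama3.2", "Explain what a large language model is in one sentence."))
    except OSError as exc:
        # URLError (connection refused, HTTP errors) and timeouts are all OSError subclasses.
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Setting `"stream": False` keeps the example short; without it, the server streams the answer back as a sequence of JSON lines.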

- To list the LLMs installed on your machine, run the following command:

```bash
ollama list
```

- Cheers! You are now all set!

- Go ahead and try out the LLM locally.
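The `ollama list` command also has a programmatic counterpart: the local Ollama server reports its installed models at the `/api/tags` endpoint. Here is a minimal Python sketch, assuming the server is running on the default `http://localhost:11434`; the function names `parse_model_names` and `list_local_models` are hypothetical.

```python
import json
import urllib.request
from typing import List

# Assumption: the Ollama server is listening on its default local address.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(body: bytes) -> List[str]:
    """Extract the model names from an /api/tags response body."""
    return [model["name"] for model in json.loads(body).get("models", [])]


def list_local_models() -> List[str]:
    """Ask the local Ollama server which models have been downloaded."""
    with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=10) as resp:
        return parse_model_names(resp.read())


if __name__ == "__main__":
    try:
        for name in list_local_models():
            print(name)
    except OSError as exc:
        # URLError (connection refused, HTTP errors) and timeouts are all OSError subclasses.
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```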

So that’s it for this post.

See you next time. Happy learning!